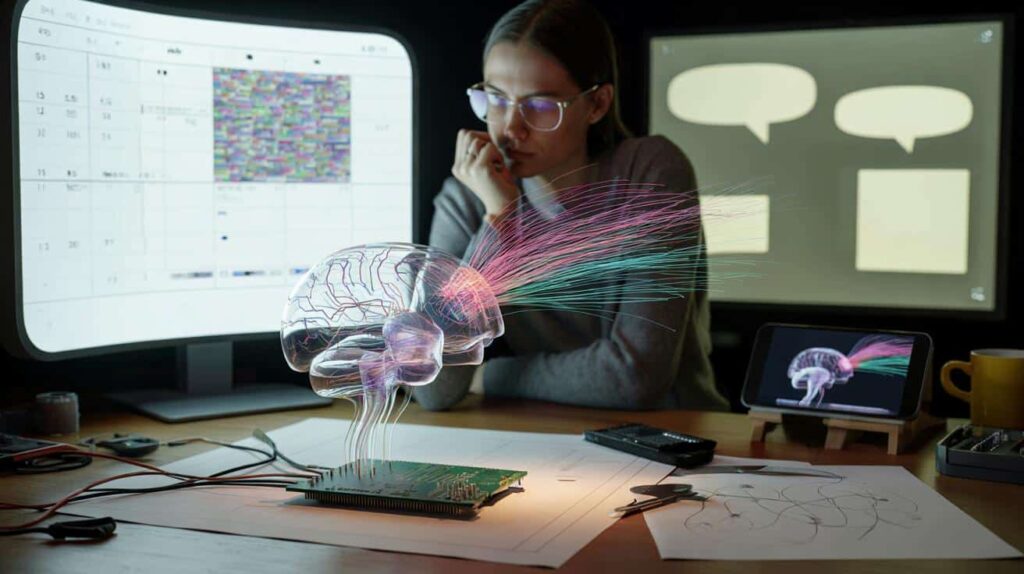

A new generation of brain-inspired AI aims higher than today’s chatbots: systems that learn in real time, use less energy, and adapt the way our brains do. The scientists behind a new prototype claim it does more than keep up with moving targets. On tasks that change constantly, they say, it outperforms the tools we use today, including ChatGPT. The gamble is simple but bold: copy the brain’s methods, not just its results. If it pays off, everyday interactions with AI will feel different.

I’m in a late-night lab surrounded by coffee-stained benches and a whiteboard covered in sketches of dendrites. A researcher taps a touchpad, and the model’s interface lights up: a stream of events rather than a fixed prompt. We’ve all had that moment when a plan shifts mid-sentence and our tools can’t keep pace; this system stays unfazed. *It felt less like software and more like a nervous system coming to life.* The researcher changes the rules of a task midway. The model hesitates for a moment, then updates itself without retraining or a reboot. It asks a question back. Just one, and it’s the right one.

A brain that computes in moments, not monologues

Most chatbots work in monologues: you enter a prompt, receive an answer, and the exchange is over. This system thinks in moments. It processes spikes and events (small changes in its input), which lets it respond as reality unfolds. Watching it work feels surprisingly human: a brief silence, a revision, then the right action. It uses a short-term memory as a scratchpad and slower loops that condense patterns over time. That brief pause isn’t lag; it’s a deliberate choice. There’s a quiet discipline to it, like a writer picking up a pen and deciding what to leave out.
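What does “processing events, not prompts” look like in practice? One common technique is send-on-delta encoding, the same idea behind event cameras: a unit reports a change only when its input moves past a threshold, and stays silent otherwise. The sketch below is our own illustration, not the team’s code; the function name `to_events` and the `threshold` value are assumptions.

```python
from typing import Iterable, List, Tuple


def to_events(samples: Iterable[float], threshold: float) -> List[Tuple[int, int]]:
    """Send-on-delta encoding (illustrative sketch, not the prototype's encoder).

    Emits (index, +1) or (index, -1) only when the signal has moved at least
    `threshold` away from the last reported level; otherwise emits nothing.
    """
    events: List[Tuple[int, int]] = []
    reference = None  # last reported level; the first sample sets the baseline
    for i, x in enumerate(samples):
        if reference is None:
            reference = x
            continue
        # A large jump produces several events so the reference catches up.
        while x - reference >= threshold:
            reference += threshold
            events.append((i, +1))  # "up" spike
        while reference - x >= threshold:
            reference -= threshold
            events.append((i, -1))  # "down" spike
    return events


signal = [0.0, 0.0, 0.05, 0.5, 0.5, 0.5, 0.1, 0.1]
print(to_events(signal, threshold=0.2))
```

Eight samples go in and three events come out; the flat stretches produce no events at all. That sparsity is where event-driven systems find their speed and energy savings.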

During a live demonstration, a calendar began to fill with overlapping meetings, last-minute reschedules, and a sudden “no calls after 3 p.m.” rule. The model didn’t merely follow commands; it restructured the rule set and spelled out the trade-offs before proceeding. **In side-by-side comparisons, the team’s prototype outperformed a GPT-style model on noisy audio transcription and on rapidly changing tasks, according to the team’s internal records.** The difference wasn’t flashy. It was practical: fewer regressions, fewer apologies, and smoother handling of change, like a driver taking a detour without losing the thread of a conversation.

The key isn’t a larger transformer. It’s an architecture that follows biology more closely: sparse activations, local plasticity rules (each connection adjusts using only the signals available to it), and a predictive loop that continually compares expectations with actual outcomes. Spiking computation means a unit fires only when something changes, which saves compute and energy compared with processing every input at every step. A compact “working memory” holds fresh context while a slower consolidation process distills what matters. That separation is meant to let the system adapt without catastrophic forgetting, the tendency of neural networks to overwrite old skills when they learn new ones. It’s not magic; it’s mechanics. Prediction compresses reality. Correction keeps it honest.
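The split between a fast working memory and slower consolidation can be sketched as a two-timescale learner, loosely in the spirit of the “complementary learning systems” idea from neuroscience. This is a minimal illustration under our own assumptions (a linear predictor, a delta-rule update, and a hypothetical class `TwoSpeedPredictor`), not the prototype’s architecture. Every observation compares the prediction with the outcome and nudges the fast weights by the error; only an explicit `consolidate()` call, the “sleep” step, moves that learning into the slow weights.

```python
import numpy as np


class TwoSpeedPredictor:
    """Hypothetical two-timescale learner (illustrative sketch only).

    - fast: short-term weights updated on every observation
    - slow: long-term weights changed only by consolidate()
    """

    def __init__(self, n_inputs: int, fast_lr: float = 0.5, decay: float = 0.9):
        self.slow = np.zeros(n_inputs)  # consolidated, long-term weights
        self.fast = np.zeros(n_inputs)  # quickly adapting "working memory"
        self.fast_lr = fast_lr
        self.decay = decay  # fast weights fade a little at each observation

    def predict(self, x: np.ndarray) -> float:
        # The expectation combines long-term and short-term knowledge.
        return float((self.slow + self.fast) @ x)

    def observe(self, x: np.ndarray, target: float) -> float:
        """Compare expectation with outcome, then correct locally."""
        error = target - self.predict(x)
        # Delta rule: each weight changes using only its own input and the error.
        self.fast = self.decay * self.fast + self.fast_lr * error * x
        return error

    def consolidate(self, rate: float = 1.0) -> None:
        """'Sleep': move a fraction `rate` of the fast weights into slow storage."""
        self.slow += rate * self.fast
        self.fast *= 1.0 - rate


# Usage: repeated exposure shrinks the error; sleeping preserves what was learned.
model = TwoSpeedPredictor(n_inputs=2)
x = np.array([1.0, 0.0])
errors = [model.observe(x, target=1.0) for _ in range(5)]
model.consolidate()
```

With decay switched on, the fast weights alone settle a little short of the target in this sketch, so repeated sleep steps are what carry learning forward over the long run.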

How to communicate with an AI that keeps learning

Teach in small increments, then check. Start by framing your request as a stream: updates, exceptions, signals. Ask the model to state the rule it thinks you’re using, then change one element and watch how it adjusts. Have it apply the new rule to a fresh example before you trust it. **Treat it like a colleague with a working memory, not a genie you command.** When the task is done, tell it to “sleep” on the session: trigger a short consolidation step that turns today’s noise into tomorrow’s insight.

The common mistakes with brain-inspired systems are familiar: cramming prompts with rigid instructions, or switching contexts so quickly that the model never settles. Give it a runway. Let it restate your goal in its own words, then steer gently instead of forcing it. When it hesitates, ask what it’s unsure about instead of repeating the same prompt louder. Do your future self a favor: name the behaviors you want, and use those names next time. Let’s be honest: not everyone does that every day. Building the habit once will save you plenty of headaches later.

Consider fragility as a neuroscientist would: where could the rule fail, and how will we recognize it? Encourage the model to identify drift and suggest a test to catch it early.
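A drift test can be as plain as comparing a recent window of outcomes with an older baseline and raising a flag when the recent error rate climbs too far above it. The sketch below assumes each step can be scored right or wrong; the class `DriftMonitor`, the window sizes, and the 0.2 tolerance are hypothetical choices, not details from the prototype.

```python
from collections import deque


class DriftMonitor:
    """Hypothetical drift check: flags when the recent error rate exceeds
    the baseline error rate by more than `tolerance`."""

    def __init__(self, baseline_size: int = 50, recent_size: int = 10,
                 tolerance: float = 0.2):
        self.baseline = deque(maxlen=baseline_size)  # older outcomes
        self.recent = deque(maxlen=recent_size)      # latest outcomes
        self.tolerance = tolerance

    def record(self, correct: bool) -> bool:
        """Record one outcome; return True if drift is suspected."""
        if len(self.recent) == self.recent.maxlen:
            # The oldest recent outcome moves into the baseline before it is dropped.
            self.baseline.append(self.recent[0])
        self.recent.append(correct)
        if len(self.baseline) < self.recent.maxlen:
            return False  # not enough history to compare yet
        base_err = 1 - sum(self.baseline) / len(self.baseline)
        recent_err = 1 - sum(self.recent) / len(self.recent)
        return recent_err - base_err > self.tolerance


# Usage: a stable stretch followed by a run of mistakes.
monitor = DriftMonitor()
outcomes = [True] * 30 + [False] * 10
flags = [monitor.record(ok) for ok in outcomes]
```

In this run the monitor stays quiet for the first two errors and fires on the third, early enough to pause and re-teach the rule before mistakes pile up.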

“We didn’t create a parrot,” one researcher explained to me. “We developed a system that anticipates changes in the world, then makes that expectation useful.”

- Start sessions with: “State the current rule, then outline what could cause it to change.”

- Use a rolling memory: “Keep the last 10 events active; archive the others.”

- Implement a quick “sleep” phase after significant edits to consolidate new patterns.

- Request a counterexample every 20 steps to avoid silent failures.
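The last three habits in that list map neatly onto a small session wrapper. This is a sketch under stated assumptions: `Session`, `on_check`, and `on_sleep` are hypothetical names, and the two callbacks stand in for whatever mechanism a given system offers to request a counterexample or trigger consolidation.

```python
from collections import deque
from typing import Callable, List


class Session:
    """Hypothetical session wrapper: keeps the last `window` events active,
    archives older ones, runs a check every `check_every` steps, and calls
    a 'sleep' hook after significant edits."""

    def __init__(self, window: int = 10, check_every: int = 20,
                 on_check: Callable[[int], None] = lambda step: None,
                 on_sleep: Callable[[List[str]], None] = lambda events: None):
        self.active = deque(maxlen=window)  # the rolling, "active" memory
        self.archive: List[str] = []        # events that scrolled out
        self.check_every = check_every
        self.on_check = on_check  # e.g. ask the model for a counterexample
        self.on_sleep = on_sleep  # e.g. trigger a consolidation step
        self.step = 0

    def add_event(self, event: str, significant: bool = False) -> None:
        if len(self.active) == self.active.maxlen:
            self.archive.append(self.active[0])  # archive before deque drops it
        self.active.append(event)
        self.step += 1
        if self.step % self.check_every == 0:
            self.on_check(self.step)  # periodic guard against silent failures
        if significant:
            self.on_sleep(list(self.active))  # consolidate after a big edit


# Usage: 45 events, with one significant edit at event 30.
checks: List[int] = []
sleeps: List[int] = []
session = Session(on_check=checks.append,
                  on_sleep=lambda events: sleeps.append(len(events)))
for i in range(45):
    session.add_event(f"event {i}", significant=(i == 30))
```

Keeping the callbacks outside the class leaves the routine independent of any particular model interface.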

What this shift could unlock

This isn’t about topping a leaderboard. It’s about changing the rhythm of how we work with machines. A brain-inspired model that learns in real time could run on smaller, cooler hardware close to where data is generated: your phone, your car, your watch. That could cut costs and improve privacy. It also reshapes jobs: less prompt-engineering gymnastics, more conversational debugging. Education might shift toward iterative teaching rather than static answers. And yes, the claim of “surpassing ChatGPT” needs careful reading: it applies to tasks in motion, noisy inputs, and open-ended goals. That’s a different scoreboard. **The next breakthrough may come not from larger models but from models that forget well.** The real test happens outside the lab, where people change their minds mid-sentence.

| Key Point | Detail | Why It Matters |
|---|---|---|
| Event-driven thinking | Spiking-style updates, local plasticity, predictive loops | Faster, calmer responses when situations change |
| On-device potential | Sparse computation works well with neuromorphic and mobile chips | Lower latency, improved privacy, longer battery life |
| Human-like workflow | Teach-test-sleep routine, rolling memory, drift checks | Fewer retries, clearer rules, more reliable results |

FAQ

- **What is “brain-inspired” AI in simple terms?** It’s software that takes cues from biology: spikes instead of constant chatter, separate short-term and long-term memory, and a loop that predicts and then corrects. The aim isn’t to replicate neurons but to capture how adaptation feels: quick, efficient, and stable over time.

- **Does it truly outperform ChatGPT?** For specific tasks that change in real time (noisy inputs, shifting rules), the team reports better performance and smoother recovery when things deviate from the script. For static Q&A or broad knowledge, GPT-style models still excel. Different contexts, different champions.

- **Will it operate on my laptop or phone?** That’s the promise. Because it computes sparsely, activating only on change, it pairs well with compact hardware. Early versions already run on small boards; mainstream phones and laptops are a feasible next step as toolchains evolve.

- **How is it different from a transformer-based chatbot?** Transformers are great at completing patterns with large, one-time passes over text. This system functions as a stream, updating small memories and rules as events occur. It’s less about providing one perfect answer and more about adapting gracefully over minutes, hours, or days.

- **What about privacy, bias, and safety?** On-device processing can minimize data exposure, which enhances privacy. Bias doesn’t disappear; it shifts. You’ll need clear logs, drift tests, and red-team prompts integrated into your workflow. Safety is a practice, not just a feature toggle.